“How do we know that what we designed was right?” I still clearly remember when one of our designers put this to the rest of the group during a roundtable discussion.

The question is both philosophical and practical.

Philosophically, UX design should strive to improve the lives of the intended audience, to make their interaction with technology easier or more pleasurable in some way.

Practically speaking, we also must remain cognizant of the quantitative results our designs deliver for the business.

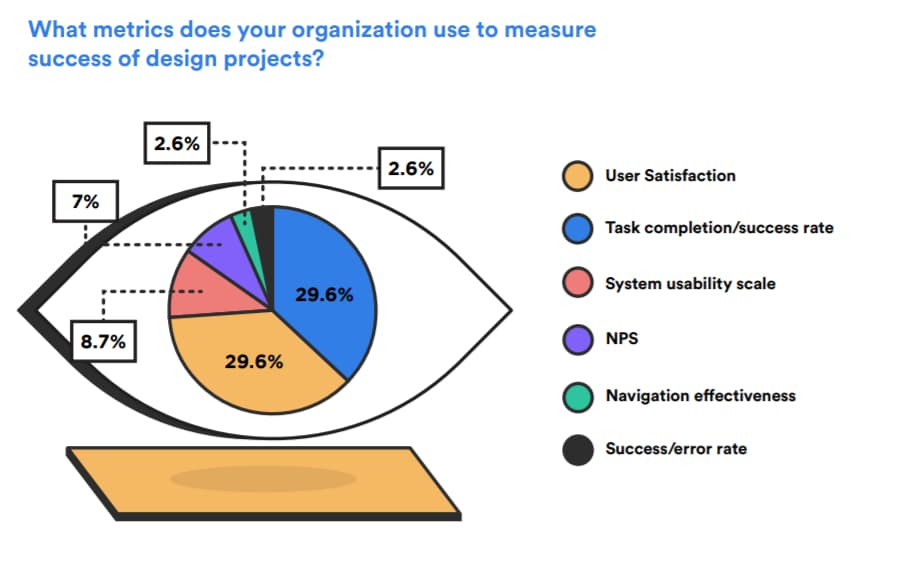

The conversation ended, but I wasn’t satisfied. So when we ran our survey on UX research, I added this question: “What metrics does your organization use to measure the success of design projects?”

Here’s what the designers told us:

As you’ll notice in the giant floating eye, there’s an obvious dichotomy in the two most popular responses. About 30% say they rely on user satisfaction as their primary success metric. Roughly the same percentage say they use task completion and success rate.

The difference between the two is important. User satisfaction is a self-reported measure, while task completion and success rate are performance measures. Let’s examine the difference between the two types of metrics and the role each plays in modern design work.

The importance of user satisfaction

User satisfaction is a self-reported metric, which means that the answers are subjective.

Self-reported metrics aren’t unique to UX design; they’re also common in medical science. For example, psychologists might ask people to rate certain characteristics about themselves during a personality test, or physicians might ask patients to rate their pain.

Although these metrics are prone to bias, they are important.

User satisfaction lets us know how users feel about their experience with the application; it gets us that little bit closer to understanding how successfully we achieved our philosophical goal of improving people’s lives.

Since the most successful experiences connect with people on an emotional level, user satisfaction remains a relevant metric. For example, when we designed a virtual AI assistant at DePalma, we knew people wouldn’t use it unless the AI had some sort of personality and resonated with them emotionally.

User satisfaction is not completely divorced from usability. As Jakob Nielsen observed, these scores often, though not always, correlate with objective usability metrics.

A quick note: NPS was a separate answer option from user satisfaction in this question, so the exact technique the respondents are using is slightly unclear. My guess is that the majority are using something akin to what Nielsen describes in the link above, i.e., “On a 1-7 scale, how satisfied were you with using this application?”

The utility of performance metrics

On the other end of the spectrum, we have performance metrics: measures like time on task, completion rate, and error rate.

These data points coalesce to form the true measure of your design’s usability. They give us a practical answer to the question of whether or not what we designed was right.

Although they’re quite useful for validating improvements in design, these objective measures can be difficult to obtain. Typically, you’ll need to recruit people for usability testing to establish how long they take to complete tasks and their success-to-error ratio.

Even then, your research will be most valuable when compared to historical benchmarks derived from previous iterations.
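To make those measures concrete, here is a minimal sketch of how completion rate, time on task, and error rate could be tallied from a round of usability tests and set next to a previous iteration. The `Attempt` record, the `summarize` helper, and all of the numbers are made up for illustration; averaging time on task over successful attempts only is also an assumption, and your team may define it differently.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Attempt:
    """One participant's attempt at one task (hypothetical record shape)."""
    completed: bool
    seconds: float
    errors: int


def summarize(attempts):
    """Return completion rate, mean time on task, and errors per attempt."""
    if not attempts:
        raise ValueError("need at least one attempt")
    finished = [a for a in attempts if a.completed]
    return {
        "completion_rate": len(finished) / len(attempts),
        # Assumption: time on task is averaged over successful attempts only.
        "mean_time_on_task": mean(a.seconds for a in finished) if finished else None,
        "errors_per_attempt": sum(a.errors for a in attempts) / len(attempts),
    }


# Made-up sample data: the previous iteration serves as the benchmark.
previous = [
    Attempt(True, 80.0, 2),
    Attempt(False, 120.0, 4),
    Attempt(True, 100.0, 2),
    Attempt(False, 90.0, 0),
]
current = [
    Attempt(True, 60.0, 1),
    Attempt(True, 50.0, 0),
    Attempt(True, 70.0, 1),
    Attempt(False, 95.0, 2),
]

before = summarize(previous)
after = summarize(current)
print("previous:", before)
print("current: ", after)
```

With only a handful of participants, differences like these are directional at best, which is one more reason the historical benchmark matters.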

Still, the effort is well worth it when it’s time to prove the ROI of your work to business stakeholders. Some performance metrics are easier to obtain than others.

Conversion rate in particular is one that was brought up several times in the open text fields for this question.

While a conversion may be more difficult to quantify in an enterprise application, conversion rate is an optimal performance metric for B2C or SaaS design projects: the historical data probably (or at least should) already exist, and it’s something you can calculate without having to sit down and run usability tests.

Plus, more conversions are about as close to direct ROI as UX design can get.
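As a rough illustration of why conversion rate is so easy to work with, the sketch below computes it from two counts and compares a redesign against the previous period. The function names and the figures are hypothetical, and it assumes "visitors" and "conversions" are already defined consistently in your analytics.

```python
def conversion_rate(conversions, visitors):
    """Share of visitors who completed the target action."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(before, after):
    """Relative change in conversion rate from one period to the next."""
    if before <= 0:
        raise ValueError("baseline rate must be positive")
    return (after - before) / before


# Made-up numbers for illustration only.
old_rate = conversion_rate(conversions=40, visitors=2000)
new_rate = conversion_rate(conversions=55, visitors=2200)
lift = relative_lift(old_rate, new_rate)

print(f"before redesign: {old_rate:.1%}")
print(f"after redesign:  {new_rate:.1%}")
print(f"relative lift:   {lift:.1%}")
```

A lift like this is only suggestive on its own; seasonality, marketing campaigns, and other changes shipped in the same period can move the number too.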

Is either one enough?

The respondents to our survey weren’t divided down the middle about this question, but there were two major camps along with a smattering of other answers.

In an ideal world, we would be able to measure how much our users liked our designs as well as how well those designs performed on an objective level. The combination of satisfaction and performance metrics is what gives us the most complete picture of whether a design was a success.

Unfortunately, we don’t inhabit an ideal world, which is why 72% of designers in this same survey said they weren’t pleased with the amount of time they got to spend on research.

With that perspective in mind, it’s easy to say, “No, philosophically we need both measures,” but when we’re all adhering to some form of constraints, sometimes one or the other has to be enough.